It's hard to remember what a developer was working on last week, let alone nine months ago. Because researching anything beyond the last three months takes so long, the potential for an annual review to improve outcomes is too often squandered. The "6-12 months ago" range is almost never discussed during a traditional developer review, because browsing through Jira tickets or commits from several months back has traditionally been far too time-consuming.

Using GitClear, it only takes about five minutes to glean all the information necessary to spark a healthy conversation about how the last year has unfolded. Here are the three reports that we recommend grabbing before you begin the annual review discussion. Remember to filter each of these reports on the individual developer participating in the annual review discussion.

1. What were the banner projects from the past year?

In the "Issues & Defects" section of GitClear, the "Biggest Issues" tab renders a list of the biggest ticket that the developer worked on in each week of the past year.

It’s easy enough to recall the large project that a developer worked on last month, but how about last spring? What were they working on then, and what exactly did those projects entail?

📍 The "Biggest Issues" list can lead toward many types of productive conversations:

For big issues that were launched 6-12 months ago, what has the customer and/or developer reception to the work been? Has it been a source of bugs, or a source of praise for how much it improved some customer problem?

For big issues that were worked on within the past six months, how did the time estimate for issues compare to the observed time for them? Is there tech debt that could be paid down in order to help future estimates grow more accurate? How was the initial quality of the work evaluated by other developers?

Were there any interesting factors at play in the weeks when less got done? Could the manager have helped the developer remove obstacles in those weeks? Could the developer have benefitted from asking for help (or asking sooner)?

The Biggest Issues tab is a good starting point for a granular conversation grounded in specific situations.

2. How collaborative of a year was it?

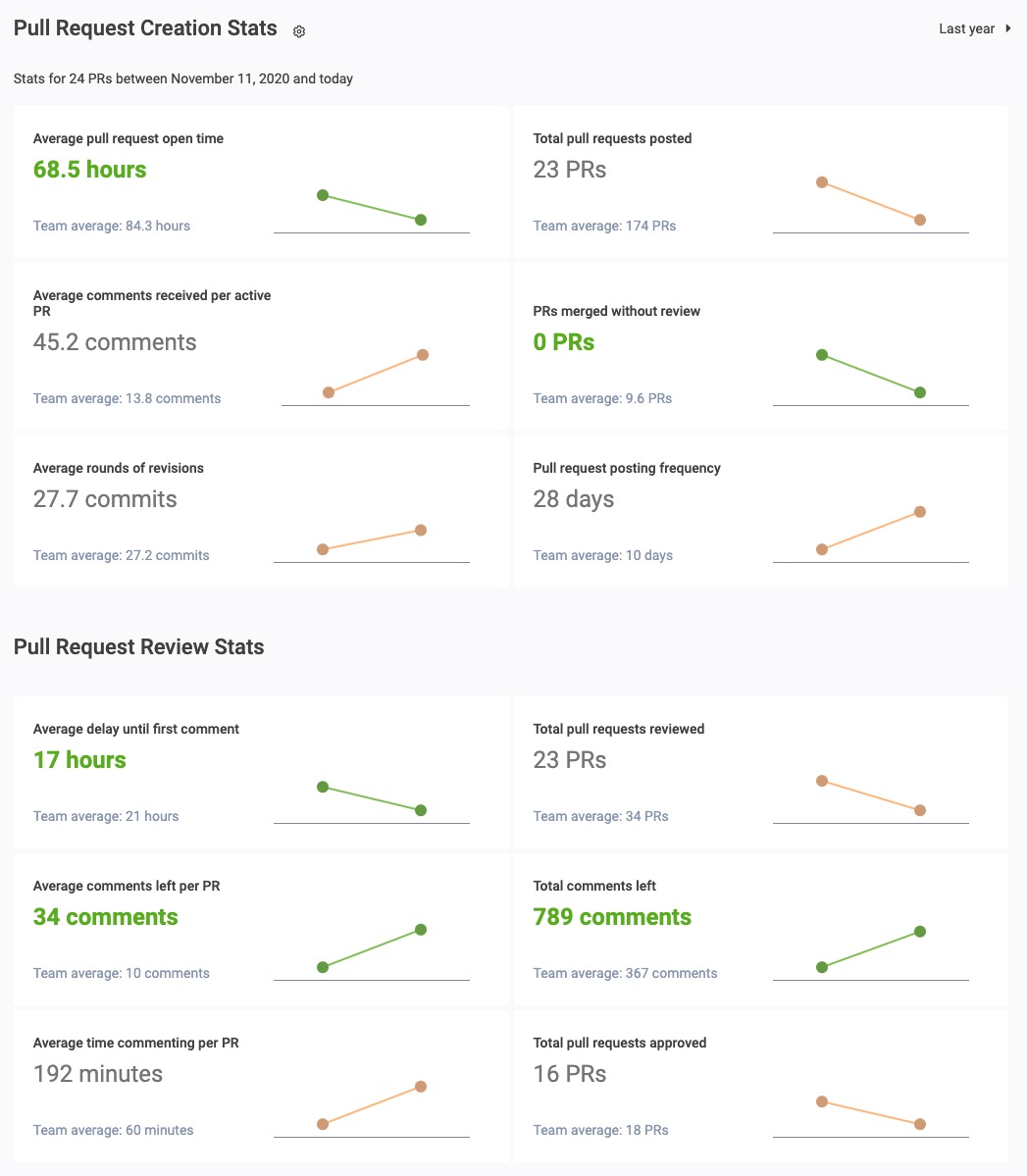

Pull Request Collaboration Stats, when focused on an individual developer, help identify how engaged the developer was with the review process.

To what extent has the developer participated in formal code review? For teams that use a pull request-based workflow, these stats offer talking points in part because they are a reflection of time & effort, which are generally mutable quantities.

📍 Productive conversations that can follow from looking at the Collaboration Stats:

How have the comments left and received per PR compared to team averages? Are those numbers at a place that seems desirable to both parties?

Has the time spent commenting per PR felt like a productive investment, relative to the time that could be spent on one's own coding? Are there aspects of the developer's PR review that could be automated, so they would need to spend less time on manual review?

How has the average number of revision rounds compared to that of other team members? Seeing 10+ rounds of revisions can be a warning that the developer could benefit from greater preparation prior to submitting their PR for review.

3. [For first-year hires] Have we done enough to help the new developer ramp up quickly?

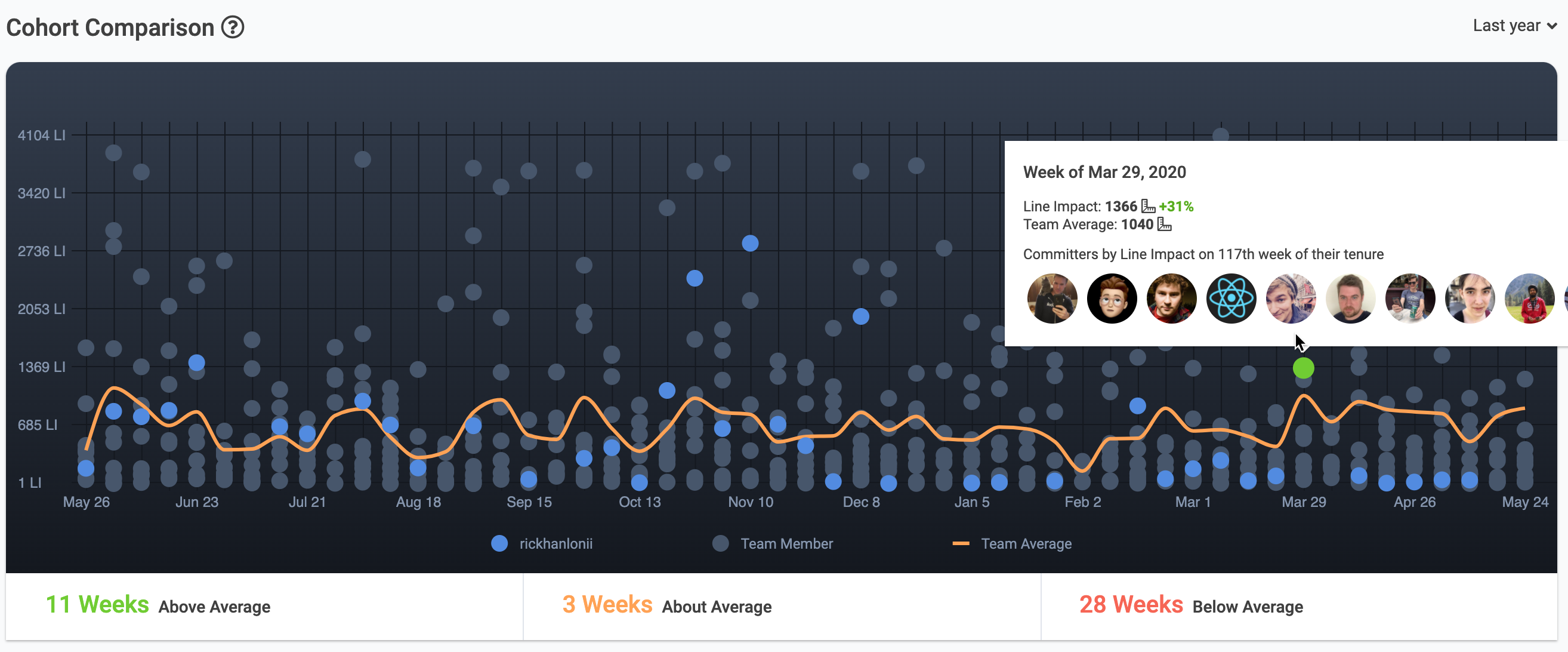

The Cohort Comparison graph illustrates how quickly the developer has been onboarding relative to past hires at a point of equivalent tenure (e.g., how did everyone fare in their 20th week?).

Smart managers want to help every developer ramp up faster than the one before. This is possible with sufficient dedication to test coverage, documentation, and tech debt removal. The Cohort Report offers a lens to open discussion around how these first 12 months chart relative to past hires from the same cohort (i.e., their first year at the company).
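
For readers who keep their own weekly numbers, the "equivalent tenure" comparison can be sketched in a few lines of Python. This is an illustration only, not GitClear's implementation: the function names, the use of week-start dates as keys, and the choice of a simple average across past hires are all assumptions made for the example.

```python
from datetime import date
from typing import Dict, List, Optional


def tenure_week(hire_date: date, week_start: date) -> int:
    """Return the 1-based week of tenure that a calendar week falls in."""
    return (week_start - hire_date).days // 7 + 1


def align_by_tenure(hire_date: date, weekly_output: Dict[date, float]) -> Dict[int, float]:
    """Re-key a developer's weekly output (hypothetical values) by tenure week."""
    return {tenure_week(hire_date, wk): value for wk, value in weekly_output.items()}


def cohort_average_at(week: int, cohort: List[Dict[int, float]]) -> Optional[float]:
    """Average output of past hires at the given tenure week, if any have data."""
    values = [series[week] for series in cohort if week in series]
    return sum(values) / len(values) if values else None


# Usage: compare a new hire's 20th week against past hires' 20th weeks.
new_hire = align_by_tenure(date(2023, 1, 2), {date(2023, 5, 15): 180.0})
past_hires = [{20: 100.0}, {20: 200.0}]
print(new_hire[20], cohort_average_at(20, past_hires))
```

Keying every series by tenure week rather than by calendar date is what lets hires from different years be lined up against each other.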

📍 Some productive conversations that the Cohort Report can bring about:

How many weeks passed before the developer reached their productive peak? What could management have done to make this process faster? Was there adequate documentation that was easy to locate? Was there clarity about initial objectives?

Once the developer reached their productive peak, did they continue at that level, or was there continued variability? Are there steps that could be taken to remove obstacles to consistent weekly output?

Which past developers had weekly output that tracked most closely with that of the developer being reviewed? What path did those past developers end up following, and can that help inform what types of tickets or management style would be best for the new hire?

If you are a manager in these conversations, put yourself in the developer's shoes and acknowledge that Line Impact does not tell the entire story of what was getting done in a given week. Their total impact on the team's dynamic includes many other factors, and the clarity of leadership's direction is a principal determinant of the results that follow.

For those with more than five minutes to prepare

If you have more than five minutes to prepare for your annual review, here are some other reports to stuff in your quiver.

Bonus report: What other skills does this developer contribute to the team?

A collection of badges that can be earned over time

Code metrics don't always tell the whole story. Perhaps someone on your team is taking time to mentor junior developers, or putting the extra effort into automating a repetitive task. These are important things to note in performance reviews as well.

With this in mind, we created Badges. Who doesn’t like collecting awards?

Badges are a great way to give recognition for the exemplary and varied work done by your team. There are two types: auto-assigned and admin-assigned.

Auto-assigned badges are awarded by GitClear when the developer meets certain criteria. Some examples:

Parachute: Directed at least 10% of a month's code toward writing tests

Linguist: Contributed in more than 5 languages over the month

Honor Roll: Held the top spot on the leaderboard over 5 straight business days
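
To make the three thresholds concrete, here is a minimal Python sketch of how such criteria could be checked. It is not how GitClear computes badges: the `MonthlyActivity` structure, its field names, and the helper functions are hypothetical, and only the thresholds themselves come from the descriptions above.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class MonthlyActivity:
    """Hypothetical summary of one developer's month (not a GitClear data structure)."""
    test_code_share: float            # fraction of the month's code directed at tests (0.0-1.0)
    languages: Set[str]               # languages contributed to during the month
    leaderboard_top_days: List[bool]  # one flag per business day: True if ranked first


def longest_streak(flags: List[bool]) -> int:
    """Length of the longest run of consecutive True values."""
    best = current = 0
    for flag in flags:
        current = current + 1 if flag else 0
        best = max(best, current)
    return best


def auto_badges(activity: MonthlyActivity) -> List[str]:
    """Return the auto-assigned badges whose stated thresholds are met."""
    badges = []
    if activity.test_code_share >= 0.10:      # at least 10% of code toward tests
        badges.append("Parachute")
    if len(activity.languages) > 5:           # more than 5 languages
        badges.append("Linguist")
    if longest_streak(activity.leaderboard_top_days) >= 5:  # 5 straight business days
        badges.append("Honor Roll")
    return badges
```

Note the different comparisons: "at least 10%" is inclusive, while "more than 5 languages" is strict, so a developer who contributed in exactly five languages would not qualify in this sketch.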

Managers can also award badges to developers as a way of recognizing achievements, or for going above and beyond the call of duty. Some examples:

Sage: Mentored a junior dev in a meaningful way

Timesaver: Automated or toolified a repetitive task

Swan: Refactored code that used to be ugly

Badges help developers feel recognized, and they give managers an easy way to track extra efforts and milestones throughout the year.