The cornerstone of GitClear's AI measurement suite, the "AI Cohorts" tab brings into focus how "the most active AI developers" on the team are performing relative to less-active AI users.

GitClear recognizes five AI cohorts:

Non-user: No record of AI use. If the developer is using AI, it is not being reported by the AI providers you have connected.

Sparse user: Uses AI once or twice per day

Periodic user: Uses AI a few times per day

Regular user: Uses AI roughly 5-10 times daily

Power user: Uses AI throughout each day

Each developer's cohort is evaluated and assigned on a weekly basis. So, if a developer recently discovered Claude Code, they might have registered as a "Non-user" or a "Sparse user" in past months, but they can move into a higher-tier cohort once they begin interacting with AI more frequently.
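To make the weekly assignment concrete, here is a minimal sketch of how a week of per-day AI interaction counts could be mapped to a cohort. The function name `classify_week` and the exact numeric cutoffs are assumptions chosen to mirror the descriptions above ("once or twice", "a few times", "roughly 5-10", "throughout each day"); GitClear's actual thresholds and inputs are not documented here and may differ.

```python
from enum import Enum


class Cohort(Enum):
    NON_USER = "Non-user"
    SPARSE = "Sparse user"
    PERIODIC = "Periodic user"
    REGULAR = "Regular user"
    POWER = "Power user"


def classify_week(daily_interactions: list[int]) -> Cohort:
    """Assign a cohort from one week of per-day AI interaction counts.

    The cutoffs below are illustrative assumptions, not GitClear's
    published thresholds.
    """
    total = sum(daily_interactions)
    if not daily_interactions or total == 0:
        return Cohort.NON_USER  # no record of AI use this week

    average_per_day = total / len(daily_interactions)
    if average_per_day <= 2:
        return Cohort.SPARSE    # once or twice per day
    if average_per_day < 5:
        return Cohort.PERIODIC  # a few times per day
    if average_per_day <= 10:
        return Cohort.REGULAR   # roughly 5-10 times daily
    return Cohort.POWER         # throughout each day
```

Because the function is applied to one week at a time, the same developer can land in different cohorts in different weeks, which is how a recent adopter moves up from "Non-user" or "Sparse user" over time.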

As with GitClear's AI Usage Charts, the AI Cohort charts are built to work with GitHub Copilot, Cursor, or Anthropic/Claude Code, so long as you have centralized your developer access via a business account. Most of the AI providers set a minimum of "5 active users" on the business account in order to report stats.

AI Cohort Graphs Available

Because a cohort graph can be built from any of the more than 50 metrics that GitClear tracks, this list is likely to grow as customers write to us to request more data.

Diff Delta by AI Cohort

How much meaningful, durable code change (the 3% of changed lines that matter) has been authored per developer?

A common pattern among teams measured: the Power User cohort has steadily gained ground to become the most prolific authors of durable code.

Like Commit Count, Diff Delta is a metric that correlates with Story Points completed. When a developer has more Diff Delta, they are authoring more durable (non-churned) code, especially deleting or updating code that has been unchanged for a year or more. The degree to which your "heavier AI use" cohorts take the lead in Delta is the degree to which you may be able to accelerate your rate of progress by embracing their methods.

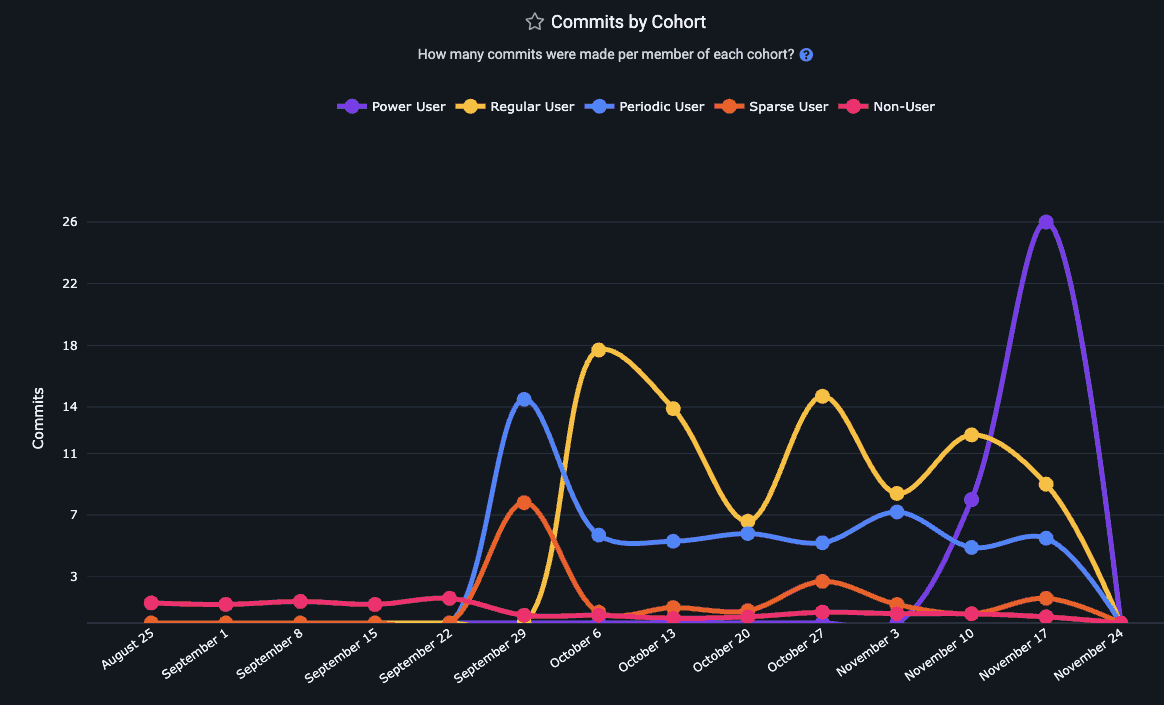

Commit Count per AI Cohort

Commit Count is a conventional productivity metric that has shown pronounced growth during the AI era. The Commits by AI Cohort graph reveals the extent to which AI usage is allowing developers to author a greater volume of commits.

As with nearly all of the Cohort graphs, the values here are per developer in the cohort, so a higher Commit Count in the higher-tier cohorts suggests that your most active AI users are also your most prolific committers.
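The "per developer in the cohort" normalization can be sketched as follows. This is an illustrative calculation only: the function name `per_developer_average` and the `(developer, cohort, value)` row shape are hypothetical, and GitClear's own aggregation may differ in its details.

```python
from collections import defaultdict


def per_developer_average(rows):
    """Average a metric per developer within each cohort.

    `rows` is an iterable of (developer, cohort, value) tuples, a
    hypothetical shape used only for this sketch. A developer may
    appear in several rows (for example, one row per day).
    """
    totals = defaultdict(float)
    developers = defaultdict(set)
    for developer, cohort, value in rows:
        totals[cohort] += value
        developers[cohort].add(developer)
    # Divide each cohort's total by its number of distinct developers,
    # so a large cohort does not look more productive just by headcount.
    return {cohort: totals[cohort] / len(developers[cohort]) for cohort in totals}
```

Dividing by the number of distinct developers is what makes cohorts of different sizes comparable: a cohort with ten developers and 100 commits scores the same as a cohort with one developer and 10 commits.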

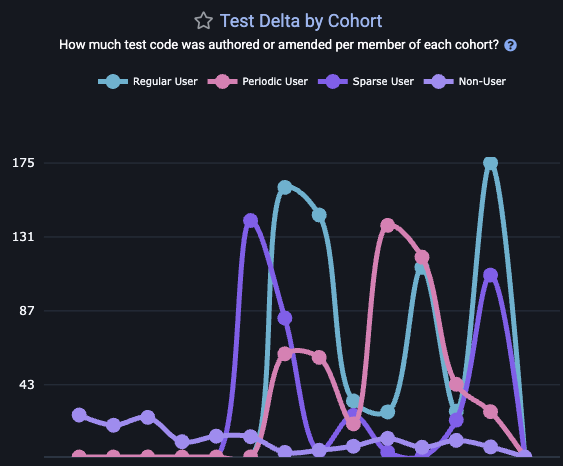

Test Delta per AI Cohort

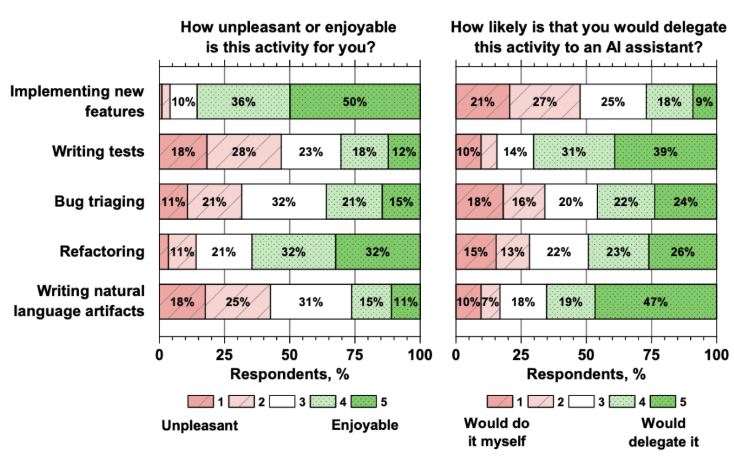

One of the specific areas that developers seem most willing to delegate to AI is tests. According to 2024 research from JetBrains, the only thing that developers like less than writing tests is "Writing natural language artifacts." As such, "writing tests" stands apart from the pack in terms of developers' willingness to delegate it to AI.

It is GitClear's position that there are two more reasons developers should be willing to delegate test-writing, or at least first-pass test-writing, to AI. When it comes to the "greatest weaknesses" of AI circa 2025, foremost among them are "code duplication" and "greater review burden." But neither of these drawbacks matters much for tests. Duplicated test code isn't ideal, but since test reuse is fairly rare in practice, it's much less important than duplication in libraries or key infrastructure. And since most reviewers dedicate less attention to each line of test code, it's less of a problem that AI might make tests more verbose than necessary.

With that profile of benefits in mind, GitClear lets you measure how much durable test code is being written by developers of varying AI engagement.

Most likely, you'll find your "Power Users" and "Regular Users" outperforming the rest when it comes to their volume of test output.

Churned Lines per AI Cohort

How much of the work that gets pushed to your default branch ends up being reworked within a week or two?

The Churned Lines by Cohort graph measures the volume of churn for each tier of AI usage. AI has cultivated something of a reputation for generating lines that seem alright at first glance, but later prove to require changes in order to operate as expected. If you find your highest-tier AI users are also 2x or more likely to churn their code, it may be worth a conversation about taking more time to self-review one's work before pushing it to the repo.
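If you export the per-developer churn values from the graph, the "2x or more" check could be sketched like this. The function names, the dictionary input, and the choice of "Non-user" as the comparison baseline are all assumptions made for illustration; the graph itself does not prescribe a baseline.

```python
def churn_ratio(churned_by_cohort, top="Power user", baseline="Non-user"):
    """Return top-cohort churn divided by baseline-cohort churn.

    `churned_by_cohort` maps cohort name to churned lines per developer
    (a hypothetical export shape). Returns None when the baseline is
    zero, since the ratio is undefined in that case.
    """
    base = churned_by_cohort[baseline]
    if base == 0:
        return None
    return churned_by_cohort[top] / base


def warrants_conversation(churned_by_cohort, threshold=2.0, **kwargs):
    """True when the top cohort churns at least `threshold` times the baseline."""
    ratio = churn_ratio(churned_by_cohort, **kwargs)
    return ratio is not None and ratio >= threshold
```

For example, `warrants_conversation({"Power user": 120, "Non-user": 50})` compares 120 against 50 churned lines per developer, a ratio of 2.4, and so returns `True`.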

Review Burden per AI Cohort

Are the developers that rely most on AI also the developers that consume the most Senior Developer (and all developer) time to review their work?

Often, yes. Research by GitClear and others has variously estimated the code review burden to have increased by 20-80% since pre-AI. That said, the correlation with "more time spent reviewing" could also be attributed to the greater prevalence of remote work, if such work results in developers becoming less aware of the domains in which their teammates are contributing. The Teammates Review Minutes per AI Cohort graph helps teams tease apart whether lengthy PR reviews are more common among developers with heavy AI use.

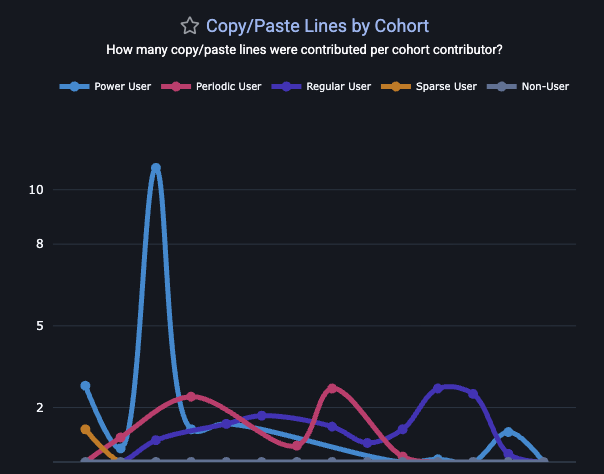

Copy/Paste Lines per AI Cohort

This graph tracks a metric that GitClear's AI Code Quality research has shown to be highly correlated with greater AI adoption.

The Copy/Paste Cohort graph lets you understand both "the extent to which AI is directly contributing to more copy/paste" and "when (on which tickets or projects) is the copy/paste duplication occurring?" If unrealistic deadlines are a concern for a team, managers may find developers more willing to reduce their self-review in order to deliver a "resolved" ticket faster, even if that means seeding tech debt for future maintainers.

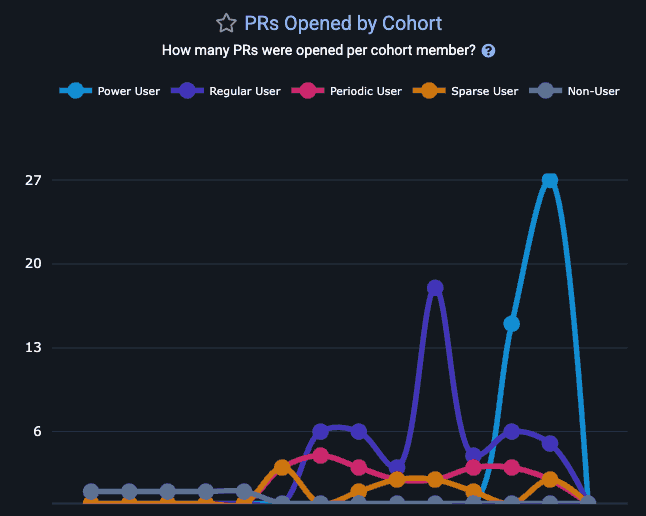

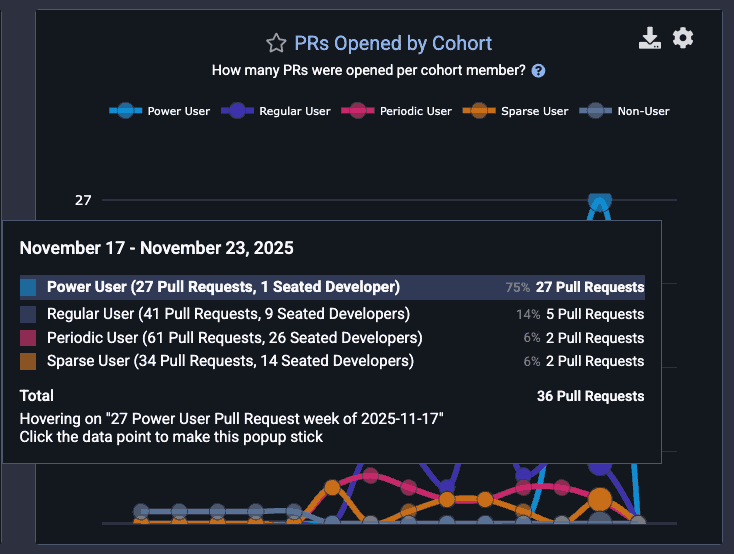

Pull Requests Opened per AI Cohort

Do more avid AI users open more pull requests?

Use the "PRs Opened by Cohort" graph to understand the relation between "AI usage" and "tendency to open pull requests for review." It's not uncommon to find that the most avid AI user(s) have an outsized impact on the team's total progress, as can be seen upon hovering over a cohort.

Provider Limitations on Cohort Derivation

In order to estimate how different levels of activity translate to each GitClear metric, it's necessary to find API endpoints that provide AI data on a per-developer basis.

As of Q4 2025, Cursor provides the most robust set of data. It reports per-developer historical stats for many different types of requests, along with lines added & deleted. If you spring for their fanciest enterprise subscription, you can even get line change counts down to a per-file granularity.

Claude Code is next best, with per-committer metrics available, along with per-model metrics that can inform your AI Usage reports. It allows months of historical processing and reports lines added & deleted, like Cursor.

GitHub Copilot is third best. It supports a variety of AI Usage metrics, but it generally doesn't break out usage per-model. Fortunately, it does allow calculating Cohort Stats. Unfortunately, it doesn't allow the stats to be calculated retroactively, so Cohort Stats only become available from the point that a user signs up for GitClear.

Anthropic is the weakest source of API data. But if you use Anthropic products other than Claude Code, feel free to reach out at hello@gitclear.com and we can discuss what is possible.