This proposal seeks a developer capable of working with the OS X Touch API to create a tool that can record how trackpad input corresponds to cursor movement in OS X. The proposal calls for two deliverables: a data collection utility, and a trackpad visualization utility.

Note that in this document I use "trackpad" when discussing the OS X touchpad specifically, since that matches Apple's own terminology. When referring to Linux touchpads, I'll use the term "touchpad."

1. Data collection utility

The structure to hold this data should be of the form:

Each of the Touch objects would include all relevant data from Apple's NSTouch class, plus a couple of others that we can calculate from Apple's values. Something like this:
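As a rough illustration, a Touch record could carry the values read from NSTouch alongside the ones derived from them. The sketch below uses Python only to describe the shape of the data (the collector itself would read these values through Apple's API). The class name `Touch`, every field name, and the choice of derived values (position in points) are assumptions made for this example, not a fixed spec.

```python
from dataclasses import dataclass, asdict


@dataclass
class Touch:
    """One finger on the trackpad at one instant (hypothetical field names)."""
    identity: str        # stand-in for NSTouch's opaque identity object
    phase: str           # e.g. "began", "moved", "stationary", "ended", "cancelled"
    normalized_x: float  # NSTouch normalizedPosition.x, from 0.0 to 1.0
    normalized_y: float  # NSTouch normalizedPosition.y, from 0.0 to 1.0
    device_width: float  # NSTouch deviceSize.width, in points
    device_height: float # NSTouch deviceSize.height, in points
    is_resting: bool     # NSTouch isResting

    @property
    def x_points(self) -> float:
        # Derived value: horizontal position scaled to the device size.
        return self.normalized_x * self.device_width

    @property
    def y_points(self) -> float:
        # Derived value: vertical position scaled to the device size.
        return self.normalized_y * self.device_height

    def to_dict(self) -> dict:
        # Combine the raw fields with the derived ones for serialization.
        data = asdict(self)
        data["x_points"] = self.x_points
        data["y_points"] = self.y_points
        return data
```

Keeping the raw normalized values next to the derived ones means a consumer of the data can recompute or verify the derived fields later.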

So, for example, one touchTimeslice might look like:

For maximum portability, the output of the data collection utility should be stored in JSON within a text file.
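To make the JSON requirement concrete, here is a minimal sketch of writing timeslices to a text file and reading them back, using Python's standard `json` module. The helper names (`make_timeslice`, `save_timeslices`, `load_timeslices`) and the keys `timestamp`, `touches`, `cursor`, and `scroll` are hypothetical. They assume each timeslice pairs the touches with the cursor and scroll position at that moment.

```python
import json


def make_timeslice(timestamp, touches, cursor, scroll):
    """Build one timeslice as plain dicts and lists (hypothetical layout)."""
    return {
        "timestamp": timestamp,  # seconds since recording started
        "touches": touches,      # list of per-finger dicts
        "cursor": {"x": cursor[0], "y": cursor[1]},
        "scroll": {"x": scroll[0], "y": scroll[1]},
    }


def save_timeslices(path, timeslices):
    """Write all timeslices to a text file as one JSON array."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(timeslices, f, indent=2)


def load_timeslices(path):
    """Read the JSON array of timeslices back into Python objects."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)
```

A recording session would then amount to appending timeslices to a list and calling `save_timeslices("session.json", slices)` at the end. Because only dicts, lists, numbers, and strings are stored, tools in other languages can read the file without a custom parser.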

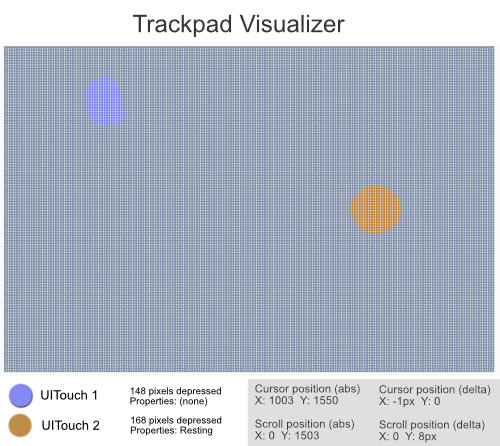

link2. Trackpad visualization utility

This tool supplements the data collection utility, allowing its work to be visualized in real time. It ought to look something like this:

Where the following are present:

Each pixel of the Mac trackpad is represented by a white dot

Each Touch object (area where a finger is touching trackpad) is illustrated by applying a color to the pixels that are depressed as a part of the touch

Current cursor position and scroll position

Delta (the change since the last render cycle) of cursor position and scroll position
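The delta items above come down to subtracting the previous render cycle's position from the current one. A minimal sketch of that bookkeeping follows. The class name `DeltaTracker` and the choice to report a zero delta on the first cycle are assumptions of this example. One tracker instance would be kept for the cursor and another for the scroll position.

```python
class DeltaTracker:
    """Tracks the change in an (x, y) position between render cycles."""

    def __init__(self):
        self._last = None  # position seen on the previous cycle, if any

    def update(self, x, y):
        """Record the current position and return (dx, dy) since the last call."""
        if self._last is None:
            # First cycle: nothing to compare against, so report no movement.
            delta = (0.0, 0.0)
        else:
            delta = (x - self._last[0], y - self._last[1])
        self._last = (x, y)
        return delta
```

For example, calling `update(10, 10)` and then `update(13, 6)` on the same tracker yields a second result of `(3, -4)`.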

Prior art

This is the best documentation from Apple I've found with examples of capturing trackpad location.

There may be usable ideas in this app, which I haven't tried to get working but which is described briefly here. This seems to be a relevant question from the developer who built the app.