GitClear calculates more than 30 different pull request metrics designed to lead a team toward actionable opportunities to improve communication and accelerate release velocity.


Big questions that GitClear Pull Request Stats offer data to help answer

Here are 10 questions that GitClear's PR stats can contribute data to help answer:

During what phases of the pull request process is the lion's share of your developers' work taking place? (see "Diff Delta by PR Stages")

Before the pull request is submitted for review. Perfect 👌. Only the contributor themselves needed to spend time on the work that occurred in this phase.

After the pull request is submitted for review. Expected, in moderation. A good litmus test: if the total Diff Delta accumulated during review is less than what was accumulated before the PR was submitted, you are probably OK. If more work happens after the PR is submitted for review than before it, that implies the contributor lacked the instruction, documentation, or clarity needed to perform their duties independently.

After the pull request is merged. Bad news. Implies that the QA and review process did not complete all work that was necessary for the pull request to be deployed without defects to production. 🐛

Who on the team has been the most prolific PR commenter? What are the downstream implications of these comments -- that is, do those who receive suggestions from this individual tend to act upon them?

Which PRs have been open the longest, and what is holding the team back from getting them closed?

Are submitted PRs at or below the team's target size? How is that trending over time?

Are submitted PRs at or below the team's target maximum comments left per PR? How is that trending over time?

How is the Cycle Time (that is: time from first commit to merge) for pull requests changing as time passes? (A potential indicator of tech debt when it's increasing)

Are pull requests being merged without review? How often does that happen and what ensues after?

Is the team generally trending in a positive direction, with fewer pull requests staying open for fewer total days?

Are there any assigned reviewers who have been especially tardy in offering their review?

What are the themes of the feedback received (e.g., "this could be a performance concern," "please follow the style guide," etc.) on a per-contributor basis?
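Two of the questions above come down to simple arithmetic: the PR-stage litmus test and Cycle Time. The sketch below shows that arithmetic only. The `PullRequestWork` record and its field names are hypothetical, invented for illustration; they are not GitClear's data model or API, and the treatment of any post-merge work as a flag is an assumption based on the "bad news" description above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PullRequestWork:
    """Hypothetical per-PR record (assumed shape, not a GitClear object)."""
    first_commit_at: datetime
    merged_at: datetime
    delta_before_review: float  # work accumulated before review was requested
    delta_during_review: float  # work accumulated while under review
    delta_after_merge: float    # work revising the PR's lines after merge


def cycle_time(pr: PullRequestWork) -> timedelta:
    """Cycle Time as described above: time from first commit to merge."""
    return pr.merged_at - pr.first_commit_at


def stage_flags(pr: PullRequestWork) -> list[str]:
    """Apply the litmus test from question one; an empty list means 'probably OK'."""
    flags = []
    # More work during review than before it suggests missing guidance up front.
    if pr.delta_during_review > pr.delta_before_review:
        flags.append("more work during review than before it")
    # Assumption: any post-merge rework is worth a look.
    if pr.delta_after_merge > 0:
        flags.append("work continued after merge")
    return flags
```

For example, a PR with 400 units of work before review, 150 during review, and none after merge yields no flags, while one with 100 before, 250 during, and 30 after merge yields both.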

If you have struggled with questions like the above and would like to connect with a for-hire CTO who is experienced in answering them using GitClear, drop a line to bill@gitclear.com. We have referrals for highly rated Engineering Execs who can help you dig into the finer details. GitClear does provide reports that can be used to answer these questions without a professional, but it takes some time to fully learn what stats are available and how your team's values compare to industry benchmarks.

What reports are available?

GitClear's Pull Request Stats are divided between four tabs:

Activity Stats

High-level stats that show the bigger picture of what's happening across your repos or in a team:

Some of the stats available among Activity Stats

Read more about Activity Stats here: Classic Pull Request stats: how to optimize what matters for long-term health

Open PRs

Granular list of pull requests that are underway (or were underway during the time range you might select):

View pull requests that are lingering (no recent work over the last five days) alongside those that are actively on the way to being merged

This tab provides a detailed, timeline-based view of all pull requests that were open during the selected time period. It can also answer questions like "Is this PR above our team's desired target for total Diff Delta or Comments?" (as evidenced by the presence of the red line). It can highlight "red flag" PR data that might need more attention.
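To make the "red flag" idea concrete, here is a minimal sketch of the checks this tab surfaces: lingering (no recent work in five days) and exceeding the team's targets. The `OpenPullRequest` record, the function name, and the example target values are hypothetical; GitClear computes these in its own reports, and its exact cutoff rules may differ from the assumptions here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumption: "lingering" means five or more days since the last activity.
LINGERING_AFTER = timedelta(days=5)


@dataclass
class OpenPullRequest:
    """Hypothetical record for an open PR (assumed shape, not a GitClear object)."""
    title: str
    last_activity_at: datetime
    diff_delta: float
    comment_count: int


def red_flags(pr: OpenPullRequest, now: datetime,
              target_diff_delta: float, target_comments: int) -> list[str]:
    """Return the reasons this PR may need more attention."""
    flags = []
    if now - pr.last_activity_at >= LINGERING_AFTER:
        flags.append("lingering")
    if pr.diff_delta > target_diff_delta:
        flags.append("over Diff Delta target")
    if pr.comment_count > target_comments:
        flags.append("over comment target")
    return flags


# Usage with made-up targets: 500 Diff Delta and 10 comments per PR.
stale = OpenPullRequest("Refactor billing", datetime(2024, 3, 3), 900, 4)
print(red_flags(stale, datetime(2024, 3, 10), 500, 10))
```

Sorting open PRs by the number of flags is one way to decide which ones to raise first in a standup.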

Read more about Open PR stats here: Kick-start lingering pull requests

Closed PRs

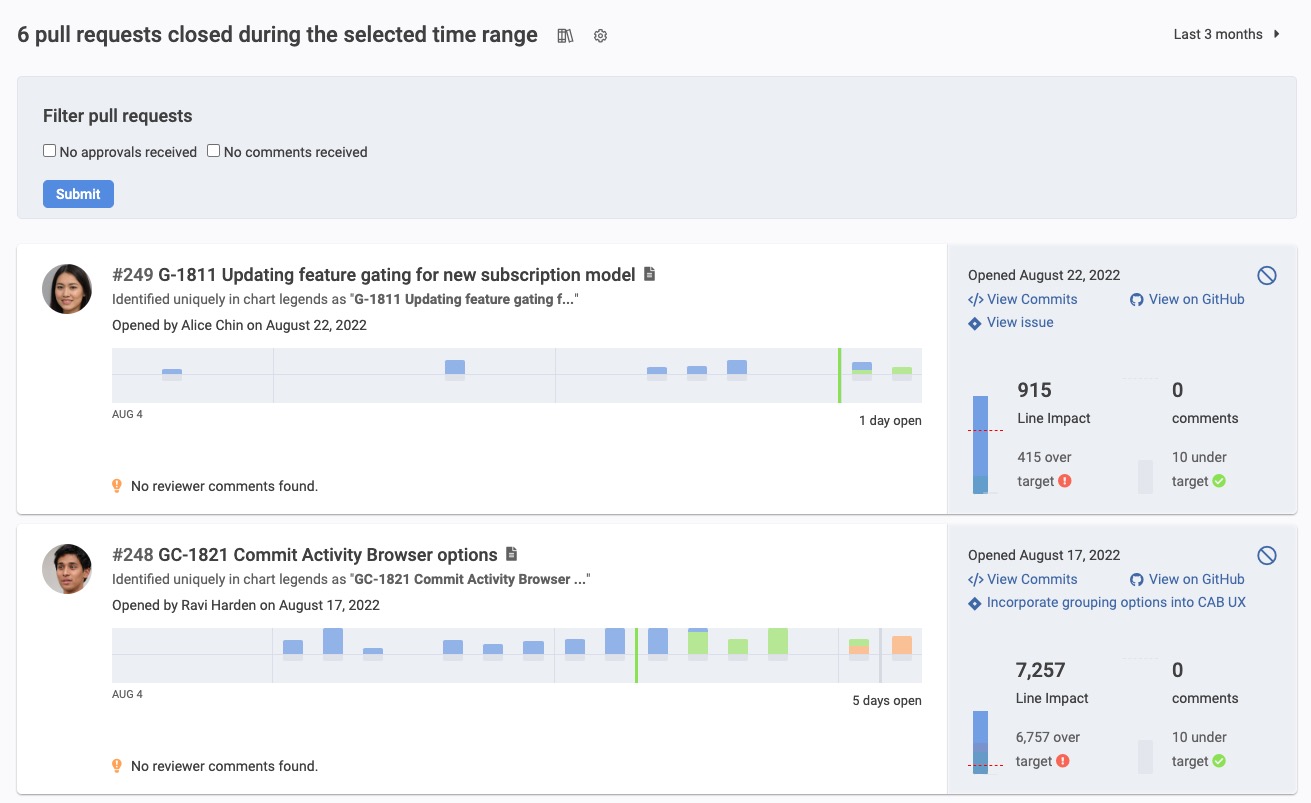

Granular list of pull requests that were recently completed:

Reassuring to see a team completing pull requests below the size targets (indicated by the green check marks) rather than exceeding them (which would be indicated by an exclamation mark and red line)

This view provides a look into recently closed pull requests in the given time period, and the timeline of their progress from pre-review, to under review, to merged (including any activity revising this PR's lines after merge). This can make for an ideal item to look through for sprint retrospectives - which PRs went swimmingly well, and which required revisions, had delays, and accumulated post-merge work?

Read more about closed PR stats here: Learning from PR overruns during your retrospective meeting

Collaboration Stats

What are the patterns in how teammates interact? Focus on an entire team, repo, or an individual.

Compare stats for the current period vs. previous periods to get a sense for which direction the team is trending

Read more about Collaboration Stats in PR collaboration hygiene: are things getting better or worse? Is collaboration slowing velocity?

How can I exclude a pull request from being factored into stats?

Read about that in the "Exiling Pull Requests" section of Kick-start lingering pull requests